Introducing our new product for seamless chat integration and customization

In today's fast-changing world, businesses need to stay on top of their game when it comes to customer engagement. With the rise of AI and large language models, chatbots have become an indispensable tool for businesses looking to improve their customer engagement strategies. However, building and managing chat code can be time-consuming and complicated, which is why we are thrilled to announce our new product that allows businesses to integrate and customize complex chat experiences without any hassle.

ChatKitty, the easiest way to build and deploy chat

When we founded ChatKitty in 2020, our goal was to create the easiest way to build chat for all types of businesses. We launched our REST API and JS SDKs to empower developers to build chat that solves their users' online communication problems. However, as with every chat API solution currently on the market, integrating API and SDK function calls into an app still takes developers significant work. Developers need to read and understand deeply technical documentation, write a significant amount of code, and handle complex chat interactions. Integrating third-party functionality like email, push notifications, SMS, and, yes, conversational AI chat also takes a lot of additional effort, and these are exactly the features businesses building customer engagement platforms need.

We've begun building a comprehensive plug-and-play product that uses new technology to solve this problem in a unique and exciting way. We're building a server-side UI rendering engine that lets us build UI and push it to user devices as HTML or native device views. This means we can build a UI once, and our customers can use it in their apps without writing a single line of code. The "server-side frontend" product will produce UI not only for web apps but also for native iOS and Android apps.

Opportunities for conversational chat in customer engagement

ChatKitty is a chat company, and we recognize the opportunities AI-powered chat creates for our customers. One area where AI has proven to be particularly useful is in the development of AI assistants. AI assistants are computer programs that use natural language processing and machine learning algorithms to interact with users and provide assistance with various tasks. Here are some of the benefits of integrating AI assistants into applications:

Reading and answering questions

One of the most significant benefits of AI assistants is their ability to read and answer lead and customer inquiries. With advanced natural language processing capabilities, these assistants can understand and respond to user questions in seconds, providing instant solutions to their problems. This functionality is particularly useful in customer service and support, where timely and accurate responses are critical to customer satisfaction.

For example, by integrating a ChatGPT-powered chatbot into our demos, we have improved response times and given potential customers faster, more accurate answers to their questions. Not only does this increase lead conversion, but it also reduces the workload on our lead generation team, allowing them to focus on more complex tasks.

Translation

Another significant benefit of AI assistants is their ability to translate languages. With the global nature of business today, it is essential to communicate with customers and clients in their language. By integrating translation functionality into our applications, we can communicate effectively with customers regardless of their location or language.

Summarization

Businesses can also use AI assistants to summarize large amounts of data quickly and efficiently. This functionality is handy for companies that deal with large volumes of data, such as market research or financial analysis. Automating the summarization process can save valuable time and resources, allowing us to focus on more critical tasks.

Customer support chatbots

Perhaps one of the most significant benefits of AI assistants is their ability to provide customer support through chatbots. Chatbots can handle a wide range of customer inquiries, from basic product information to complex technical support. By automating the customer support process, we can provide 24/7 support, improve response times, and reduce costs.

Because language models like ChatGPT can make use of new information supplied in their prompt without being retrained, we can easily customize them for our specific company and business domain.
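In practice, customizing a model without retraining usually means placing company-specific facts in the prompt itself. The Python sketch below assumes a hypothetical in-memory knowledge base (`KNOWLEDGE_BASE`) and hypothetical helper names; it picks the snippets that share the most words with a customer's question and places them in a system message, using the role/content message format of OpenAI's Chat Completions API. Naive keyword overlap stands in here for the embedding-based retrieval a production system would more likely use.

```python
import re

# Hypothetical company knowledge base; in practice this might come from docs or a CMS.
KNOWLEDGE_BASE = [
    "Our support team is available Monday to Friday, 9am to 5pm EST.",
    "Refunds are available within 30 days of purchase.",
    "The Pro plan includes unlimited chat channels.",
]

def words(text):
    """Lowercase set of words in `text`, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def select_snippets(question, snippets, k=2):
    """Return up to k snippets ranked by word overlap with the question (naive retrieval)."""
    q = words(question)
    scored = [(len(q & words(s)), s) for s in snippets]
    scored = [pair for pair in scored if pair[0] > 0]  # drop irrelevant snippets
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [s for _, s in scored[:k]]

def build_messages(question, snippets=KNOWLEDGE_BASE):
    """Assemble chat messages that ground the model in company-specific facts."""
    context = "\n".join(f"- {s}" for s in select_snippets(question, snippets))
    system = (
        "You are a customer support assistant. Answer using only the facts below; "
        "if they do not cover the question, say so.\n" + context
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]

messages = build_messages("Are refunds available after purchase?")
```

Updating what the assistant "knows" then only means editing the knowledge base; the model itself is unchanged.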

Chatbots can provide 24/7 availability, allowing customers to get answers to their questions at any time of day or night, regardless of whether human agents are available. By automating responses to common customer inquiries, conversational AI reduces the workload of human agents, allowing them to focus on more complex customer issues. Chatbots can also be a cost-effective alternative to hiring and training additional customer service representatives, since they can handle multiple customer inquiries simultaneously. Ultimately, businesses can provide quick and efficient answers to customer inquiries, increasing customer satisfaction and loyalty.

The current state of AI and large language models

I've been following the development of artificial neural networks for several years now. And the progress made by large language models like ChatGPT, GPT-3, and BERT over the past few years has been quite remarkable.

This breakthrough in natural language processing opens up new opportunities for businesses building interactive user applications. By unlocking new abilities for language translation, chatbots, writing assistance, text summarization, and even code generation, language models like ChatGPT have the potential to transform the way we interact with non-human intelligent systems and to reshape our society in ways not seen since the dawn of the internet and the industrial revolution.

That ChatGPT can generate such human-like text may be surprising given how it works. Like other language models in the Generative Pre-trained Transformer (GPT) family, ChatGPT uses a Transformer architecture. Transformer neural networks, first described by researchers at Google in 2017, are a deep learning architecture that has become increasingly popular for natural language processing tasks such as language translation and language modeling. Unlike recurrent neural networks, which process the elements of an input sequence one at a time, Transformers process an entire input sequence in parallel.

Before we can dive into the specifics of Transformers, we'll need to first discuss word embeddings.

Word embeddings

Word embedding is a very interesting concept, and it's worth spending a bit of time understanding what word embeddings are and why they are so important to how current state-of-the-art language models work. Before a sequence of text can be processed by a neural network, its tokens must be converted to a representation the network can understand. Like all neural networks, Transformer language models represent inputs, features, and outputs as vectors of numbers (more precisely, as arrays of floats). A word embedding is simply an efficient representation of a word or token as a numerical vector of fixed length that captures some of the "meaning" of that word relative to other words in a language. With word embeddings, words that are used in similar contexts are represented closer to each other than words that tend to be used in different contexts.

Each dimension of the vector loosely captures some feature or aspect of the word. Standalone word embeddings are typically learned from large amounts of text data using algorithms like Word2Vec or GloVe. Once trained, these embeddings can be used as input to neural networks for a variety of natural language processing tasks.

Word embeddings can be used to compare the meaning of words in different contexts and to identify relationships between words. For example, if we have a vector for "cat" and a vector for "dog", we can measure the distance between these two vectors to determine how semantically similar they are. We can expect the vectors for "dog" and "cat" to be very similar and close to the vector for "pet", while the vector for "car" should be quite different. In addition to words, embeddings can also represent phrases and the relationships between them. This makes it easier for neural networks to learn patterns and relationships between words, and is particularly useful for natural language processing tasks such as sentiment analysis, question answering, and machine translation.
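A common way to measure how close two embeddings are is cosine similarity, the cosine of the angle between the two vectors. The Python sketch below uses made-up four-dimensional vectors for "cat", "dog", "pet", and "car" (real embeddings are learned and have far more dimensions), so only the relative similarities, not the numbers themselves, are meaningful.

```python
import math

# Hypothetical 4-dimensional embeddings, hand-picked for illustration only.
EMBEDDINGS = {
    "cat": [0.9, 0.8, 0.1, 0.0],
    "dog": [0.8, 0.9, 0.2, 0.0],
    "pet": [0.85, 0.85, 0.1, 0.1],
    "car": [0.0, 0.1, 0.9, 0.8],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means they point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def most_similar(word, embeddings=EMBEDDINGS):
    """Return the other word whose vector is closest to `word`'s vector."""
    return max(
        (w for w in embeddings if w != word),
        key=lambda w: cosine_similarity(embeddings[word], embeddings[w]),
    )

print(round(cosine_similarity(EMBEDDINGS["cat"], EMBEDDINGS["dog"]), 2))  # 0.99
print(round(cosine_similarity(EMBEDDINGS["cat"], EMBEDDINGS["car"]), 2))  # 0.12
print(most_similar("cat"))  # pet
```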

Note that, strictly speaking, GPT models do not deal with words but with tokens, which roughly correspond to words. A token here is a common sequence of text characters that can easily be assigned a meaning. A token might be a whole word like "cat", or a meaningful fragment like "ing", "ly", or "ed". Splitting text into tokens makes it easier for neural networks to handle compound or non-standard words, and even other languages. After the input text is "tokenized", each token is converted into an embedding. In GPT models, this table of token embeddings is learned along with the rest of the network during training, rather than produced by a separate algorithm like Word2Vec.
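GPT tokenizers are based on byte-pair encoding (BPE): text is split into small units, and a learned, ranked list of merge rules repeatedly joins adjacent pairs into larger tokens. The Python sketch below applies a handful of made-up merge rules to characters (real GPT tokenizers operate on bytes and learn tens of thousands of merges), then looks each token up in an embedding table. The randomly initialized table is only a stand-in for the learned parameters of a real model.

```python
import random

# Hypothetical merge rules, highest priority first. Real tokenizers learn these from data.
MERGES = [("i", "n"), ("in", "g"), ("w", "a"), ("l", "k"), ("wa", "lk"), ("c", "a"), ("ca", "t")]

def bpe_tokenize(word, merges=MERGES):
    """Split `word` into characters, then repeatedly apply the best-ranked merge."""
    ranks = {pair: i for i, pair in enumerate(merges)}
    symbols = list(word)
    while len(symbols) > 1:
        pairs = list(zip(symbols, symbols[1:]))
        best = min(pairs, key=lambda p: ranks.get(p, len(ranks)))
        if best not in ranks:
            break  # no applicable merge left
        merged, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == best:
                merged.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                merged.append(symbols[i])
                i += 1
        symbols = merged
    return symbols

def build_embedding_table(vocab, dim=4, seed=0):
    """One random vector per token; a real model learns these values during training."""
    rng = random.Random(seed)
    return {tok: [rng.uniform(-1.0, 1.0) for _ in range(dim)] for tok in vocab}

# Vocabulary: single characters plus every token the merge rules can produce.
VOCAB = sorted(set("walkingcats") | {a + b for a, b in MERGES})
TABLE = build_embedding_table(VOCAB)

tokens = bpe_tokenize("walking")        # ['walk', 'ing']
vectors = [TABLE[t] for t in tokens]    # one 4-dimensional vector per token
```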

Transformers

The Transformer was introduced by Vaswani et al. in "Attention Is All You Need" (2017) and quickly became one of the most popular architectures for NLP tasks. Language models are probability distributions over sequences of words (or tokens) in a language. This means their primary function is predicting how likely each possible word is to come next, given the sequence so far.
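To make "probability distribution over sequences" concrete, the Python sketch below estimates next-token probabilities from counts in a tiny made-up corpus. This is a bigram model, not how GPT works: it conditions only on the single previous token, whereas a Transformer conditions on the whole preceding sequence. That one-token memory is exactly the limitation a good language model has to overcome.

```python
from collections import Counter, defaultdict

# Tiny hypothetical training corpus, already split into tokens.
CORPUS = "the cat sat on the mat . the dog sat on the rug .".split()

def train_bigram(tokens):
    """Count how often each token follows each previous token."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts

def next_token_distribution(counts, prev):
    """Estimate P(next | prev) from counts; returns {} for tokens never seen."""
    total = sum(counts[prev].values())
    return {tok: n / total for tok, n in counts[prev].items()} if total else {}

model = train_bigram(CORPUS)
print(next_token_distribution(model, "the"))
# {'cat': 0.25, 'mat': 0.25, 'dog': 0.25, 'rug': 0.25}
```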

For a language model to be good at predicting likely next words for a given sequence, it needs to be able to look back in the sequence to recall the context and semantics of previously seen tokens; in other words, it needs memory. Words at the end of a meaningful sentence often depend on words near its beginning. For example, to complete the sentence "Jack came to the pub to have a drink and I talked to ", we'd expect the next word in the sequence to be a noun or a pronoun, such as "him" or "her".

However, the relevant piece of information is the word "Jack", all the way at the beginning of the sentence. A good language model needs to remember "Jack" and return a probable next token associated with "Jack" in the language: "Jack came to the pub to have a drink and I talked to him". Without the ability to remember relevant context in a text sequence, a language model is likely to generate sentences that are syntactically valid but lose track of that context (for example, "Jack came to the pub to have a drink and I talked to John").

Attention

In order to learn efficiently from massive datasets and generate sequences of text, language models need a way of deciding which parts of an input sequence are more important, assigning different weights to different parts of the input (represented as numeric vectors). By assigning greater weight to certain parts of the input, the model can be thought of as "paying attention" to those parts.

The key innovation of the Transformer architecture is its so-called "self-attention" mechanism. Self-attention works by computing a score between every pair of elements in the input sequence. Each score is the dot product of learned projections of the two elements' vectors (called the query and the key), scaled by the square root of the vector dimension. The model normalizes these scores with a softmax and uses the resulting weights to decide how much to "pay attention" to each element.
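The computation described above is usually called scaled dot-product attention. The pure-Python sketch below shows a single attention head. The identity projection matrices and toy token vectors are illustrative assumptions: a real model learns its query, key, and value projections and runs many heads in parallel.

```python
import math

def softmax(xs):
    """Numerically stable softmax: turns scores into weights that sum to 1."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both given as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x: one vector per token (n x d). w_q, w_k, w_v: query/key/value projections.
    Returns (outputs, attention_weights).
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    outputs, weights = [], []
    for q_i in q:
        # Score token i against every token j: dot(q_i, k_j) / sqrt(d_k).
        scores = [sum(a * b for a, b in zip(q_i, k_j)) / math.sqrt(d_k) for k_j in k]
        w = softmax(scores)
        weights.append(w)
        # Output for token i: attention-weighted average of all value vectors.
        outputs.append([sum(w[j] * v[j][c] for j in range(len(v))) for c in range(len(v[0]))])
    return outputs, weights

identity = [[1.0, 0.0], [0.0, 1.0]]
x = [[2.0, 0.0], [0.0, 2.0], [1.0, 1.0]]  # toy vectors for a 3-token sequence
out, attn = self_attention(x, identity, identity, identity)
```

Each row of `attn` sums to 1, and the first token attends most to itself because its query is most aligned with its own key.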

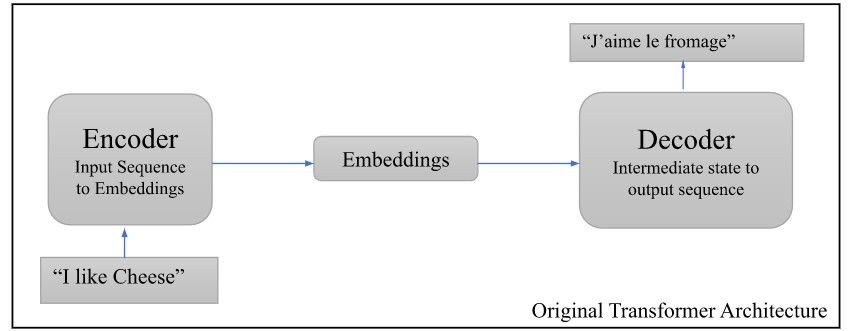

In addition to self-attention, the original Transformer uses an encoder-decoder architecture, which lets the model handle input and output sequences of different lengths. The encoder processes the entire input sequence at once and produces a context-aware vector representation of each element. The decoder then attends to these representations while generating the output one element at a time.

Original Transformer Architecture. Image credit: Kindra Cooper/"OpenAI GPT-3: Everything You Need to Know"

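GPT models, including ChatGPT, keep only the decoder half of this design: they generate text one token at a time, and each position can attend only to the tokens before it. Using self-attention in this way, Transformer models like ChatGPT are capable of learning from large datasets and generating coherent sequences of text.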
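GPT-style decoder-only models generate text one token at a time, so a position must not see the tokens that come after it. They enforce this with a causal mask: scores for future positions are set to negative infinity before the softmax, so those positions receive exactly zero weight. The Python sketch below applies such a mask to a made-up score matrix for a three-token sequence.

```python
import math

def softmax(xs):
    """Numerically stable softmax; -inf entries get weight 0."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def causal_attention_weights(scores):
    """Mask an (n x n) score matrix so position i sees only j <= i, then softmax each row."""
    n = len(scores)
    masked = [[scores[i][j] if j <= i else -math.inf for j in range(n)] for i in range(n)]
    return [softmax(row) for row in masked]

# Hypothetical raw attention scores for a 3-token sequence.
scores = [[1.0, 2.0, 3.0],
          [0.5, 0.5, 9.0],
          [0.0, 1.0, 0.0]]
weights = causal_attention_weights(scores)
# weights[0] == [1.0, 0.0, 0.0]: the first token can only attend to itself.
# weights[1] == [0.5, 0.5, 0.0]: the large future score 9.0 is ignored.
```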

Integrating ChatGPT and beyond into our chat platform

Last week, OpenAI introduced APIs for its ChatGPT and Whisper models, giving developers access to its state-of-the-art language and speech-to-text generative AI capabilities. The same day I was able to integrate ChatGPT as an AI assistant into an application powered by ChatKitty.

ChatKitty provides Chat Functions, an event-driven serverless framework for extending our platform to handle use cases that even we can't imagine. With ChatGPT, your AI assistant can help your users draft emails, write code, answer questions, translate messages, summarize chats, and much more.
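As an illustration of what such an integration might involve, the Python sketch below builds a request to OpenAI's Chat Completions endpoint asking `gpt-3.5-turbo` to summarize a chat transcript. The handler name `on_message_received`, the `/summarize` command, and the event's `text` and `history` fields are hypothetical stand-ins, not ChatKitty's Chat Functions API; only the endpoint URL, headers, and request/response shape follow OpenAI's documented API.

```python
import json
import urllib.request

OPENAI_URL = "https://api.openai.com/v1/chat/completions"

def build_summary_request(messages, api_key, model="gpt-3.5-turbo"):
    """Build (but do not send) a Chat Completions request that summarizes a chat.

    `messages` is a list of (sender, text) pairs, a hypothetical stand-in for
    whatever message objects your chat platform delivers.
    """
    transcript = "\n".join(f"{sender}: {text}" for sender, text in messages)
    body = {
        "model": model,
        "messages": [
            {"role": "system", "content": "Summarize this chat conversation in two sentences."},
            {"role": "user", "content": transcript},
        ],
    }
    return urllib.request.Request(
        OPENAI_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )

def send(request):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]

def on_message_received(event, api_key):
    """Hypothetical event handler: summarize the chat when a user types /summarize."""
    if event["text"].strip() == "/summarize":
        return send(build_summary_request(event["history"], api_key))
    return None
```

Keeping request construction separate from sending makes the payload easy to inspect and test without network access or an API key.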

Summary

I'm excited about the opportunities AI-powered chat creates for customer engagement and communication. The progress made by Transformer-based large language models like ChatGPT, GPT-3, and BERT is truly remarkable, unlocking new abilities for language translation, chatbots, writing assistance, text summarization, and code generation. Although current large language models are far from perfect, with the help of AI-powered chat, businesses can communicate with customers in a more natural and personalized way, improving engagement and providing better customer service. Issues regarding AI safety, potential biases, hallucinations, and non-factual results need to be considered, but I'm confident that with continued advances in AI, machine learning, and natural language processing, AI-powered chat will be a powerful tool for businesses to engage with customers and increase customer satisfaction.